Adapted from https://thatcrmblog.wordpress.com/2024/05/05/using-copilot-to-navigate-microsoft-licensing/

For those of us who read medical papers, it can be rough sailing. The texts are written for medical professionals and not the rest of us. There is the excellent D-Coded Diabetes Research, but they have only D-Coded a handful of papers, so what do we do about the rest?

I wondered if AI could come to our aid and it turns out Bing Copilot can be really useful.

Bing Copilot can Read PDFs

As well as answering questions about web sites, Copilot can review a PDF file you open in Edge and answer questions on it, almost instantly. At the time of writing, this is the link for the Type 1 Consensus Report from the EASD and ADA. From here you can open a PDF version of the full report. With the report running to 37 pages, I am more than happy for Copilot to navigate the jargon, footnotes and tables instead of me.

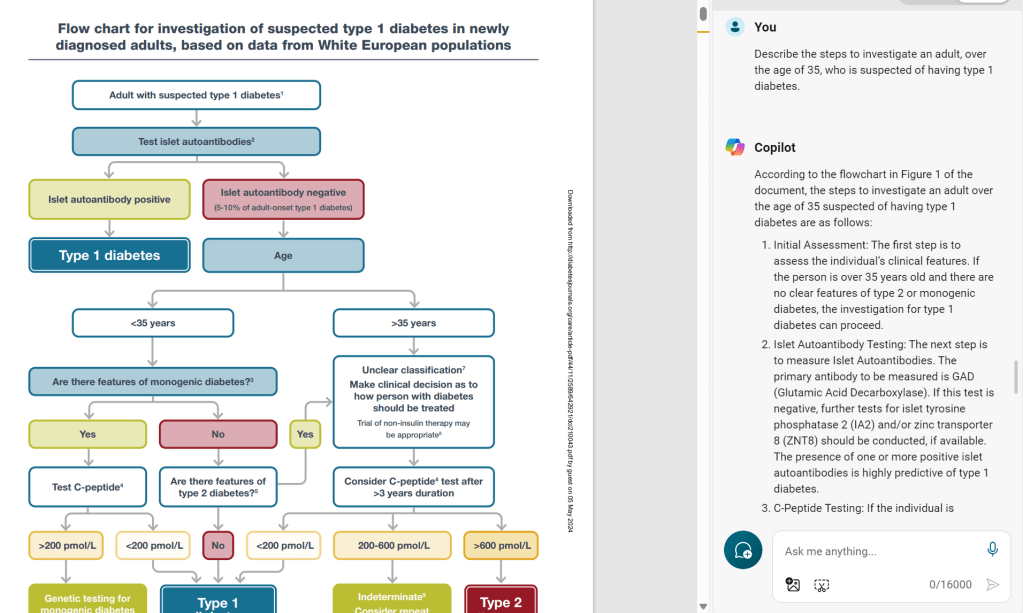

So how did it go? The first test was to see if it could read a flowchart. Browsing through the document, I found one describing the diagnosis of type 1 diabetes. When I asked what to do for an adult over the age of 35, Copilot did a pretty good job of walking through the process.

My next test was to read a table and return results. It also passed with flying colours and even gave additional context.

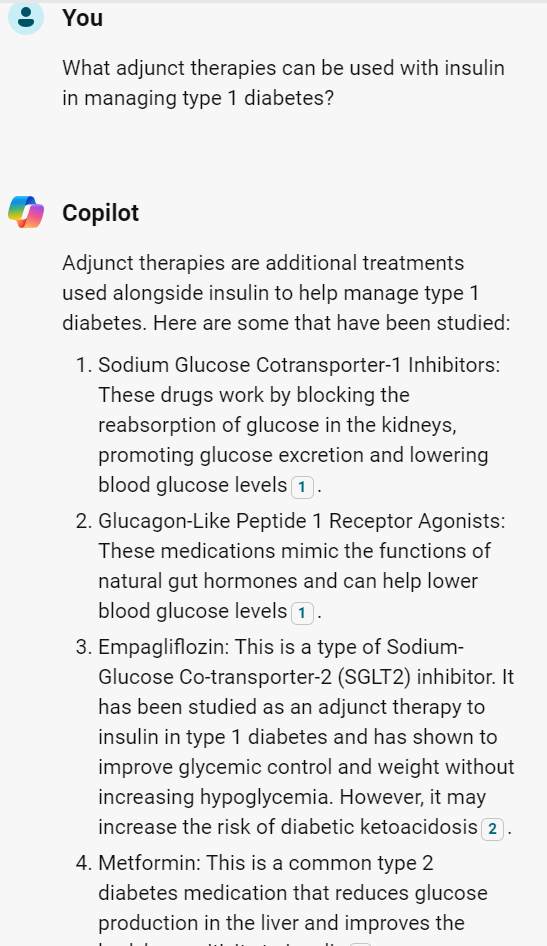

While the above questions were a little artificial, intended only to establish that it could read the document accurately, we can also ask more practical questions, such as what medications other than insulin can be taken to help manage type 1 diabetes.

We need to be careful though: unless we tell Copilot to use the document exclusively, it will also go off to the internet, which may or may not be desirable.

Asking this question without confining it to the document brings back a slightly different list of proposed medications, and while this list is not endorsed by the EASD/ADA consensus, we can still assess the sources if we want to explore more.

So What if Copilot Gets it Wrong?

I figured the best person to ask was Copilot itself and, sure enough, it confirmed that it can get things wrong and gave sensible advice as a consequence.

As discussed in my article on AI from my other blog, common sense, as provided by a human, should prevail. As Edge Copilot provides sources (and links to where it read the information in the document), it makes sense to double-check these before putting one’s health on the line, using a carefully curated health care team as a sanity check. However, for quickly reading through a jargon-filled document and pointing me to the key places of interest on a specific topic, I see this as a very useful tool.